Deep learning (DL) has been widely used in recent years in computer vision and natural language processing (NLP) applications because of its strong empirical performance. However, although images and natural language are rich in structure, expressed for example by grammar rules, DL has so far not been able to represent and enforce such structure explicitly [Huang18]. In this project, we propose to bridge this gap by exploiting tensor product representations (TPR) [Smolensky90a, Smolensky90b], a structured neural-symbolic model developed in cognitive science, with the aim of integrating DL with explicit language rules, logical rules, or rules that summarize human knowledge about the subject.
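To make the idea of a TPR concrete, the sketch below shows the basic binding and unbinding operations in the sense of [Smolensky90a]: each symbol (a "filler") is bound to a structural position (a "role") by an outer product, and the bindings are summed into one tensor. It is only an illustration under simplifying assumptions, not the model proposed in this project. The toy sentence, the hand-picked vectors, and the helper names `bind` and `unbind` are all hypothetical, and the role vectors are assumed orthonormal so that unbinding is exact.

```python
# Minimal TPR sketch in plain Python (illustrative only; all names and
# vectors here are hypothetical and chosen by hand, not learned).

def outer(f, r):
    """Outer product of filler f and role r as a len(f) x len(r) matrix."""
    return [[fi * rj for rj in r] for fi in f]

def bind(fillers, roles):
    """Build the TPR T = sum_i f_i (outer) r_i from paired fillers and roles."""
    T = [[0.0] * len(roles[0]) for _ in range(len(fillers[0]))]
    for f, r in zip(fillers, roles):
        binding = outer(f, r)
        T = [[t + b for t, b in zip(t_row, b_row)]
             for t_row, b_row in zip(T, binding)]
    return T

def unbind(T, role):
    """Recover the filler bound to `role` as T . role.

    This is exact only under the assumption that roles are orthonormal.
    """
    return [sum(t * r for t, r in zip(row, role)) for row in T]

# Toy structure: "dog chases cat" with one-hot (orthonormal) role vectors.
roles = {
    "subject": [1.0, 0.0, 0.0],
    "verb":    [0.0, 1.0, 0.0],
    "object":  [0.0, 0.0, 1.0],
}
fillers = {
    "dog":    [1.0, 0.0],
    "chases": [0.0, 1.0],
    "cat":    [1.0, 1.0],
}

T = bind(
    [fillers["dog"], fillers["chases"], fillers["cat"]],
    [roles["subject"], roles["verb"], roles["object"]],
)

# Querying the "object" role returns the filler vector for "cat".
recovered = unbind(T, roles["object"])
```

The point of the sketch is that the structure (which symbol fills which position) is stored explicitly in the tensor and can be queried by role, which is the property that a purely distributed DL representation does not guarantee.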

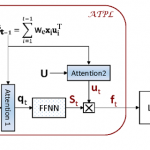

F3-M TPR Learning: a Symbolic Neural Approach for Vision Language Intelligence