PI: Dejing Dou

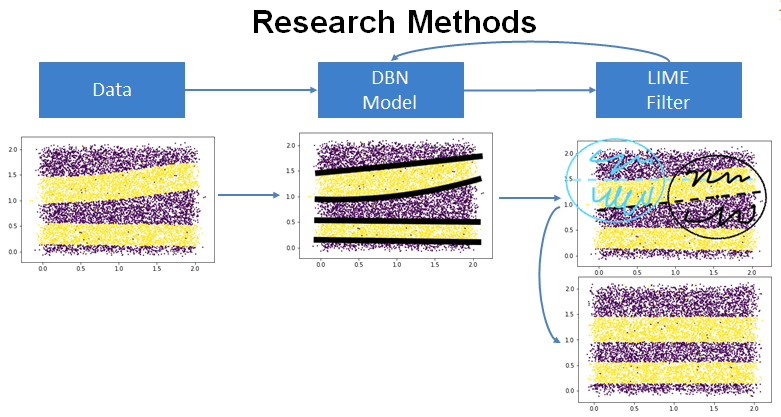

In the first year, we surveyed contemporary explanation methods and our existing work on structured deep learning with ontologies. We developed a novel feature importance scoring method that normalizes LIME-based weights to give more globally representative interpretations, and we carried out a user study to validate the efficacy and usefulness of the method. We also proposed an importance-based feature filtering method and used it to demonstrate our results across standard text and image datasets, showing minimal increase in error at certain levels of dimension reduction. A summary publication of our work to date is under review at CIKM’19.

In the second year, we will focus on domain ontology-specific deep learning methods, integrating contemporary deep learning model designs with the existing SMASH healthcare ontology and its corresponding data. The goal is to structure the model input, and potentially the output, so as to develop meaningful semantic explanations that compare favorably with the state of the art. We will measure the utility of our approach in two ways: (1) the improvement in model performance relative to other state-of-the-art human behavior explanation models, and (2) the interpretability of the explanations, especially compared with those generated by non-ontology-specific explanation algorithms (LIME, SHAP, DeepLIFT, etc.). This comparison can be made through user studies, simulated use studies, or the other quantitative importance scoring mechanisms outlined in our year 1 work.

We plan to extend our ontology-based deep learning (OBDL) algorithms to other application domains related to human behavior prediction, such as Electronic Health Records (PeaceHealth), Drug Information (with Eli Lilly), and Social Media (with Baidu). There is an urgent need for models that can learn feature representations while incorporating existing domain knowledge.
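As a rough illustration of the year 1 idea of normalizing local, LIME-style weights into global importance scores and then filtering features by those scores, the following minimal sketch may help. It is not the method from the paper under review: the per-instance L1 normalization, the averaging step, and the names `global_importance`, `filter_features`, and `keep_fraction` are all assumptions made for illustration, and the local weights are hard-coded toy values rather than output from LIME itself.

```python
def global_importance(local_weights):
    """Aggregate per-instance explanation weights into one score per feature.

    Assumption: each row holds the local weights for one instance. Each row
    is scaled by its L1 norm so that no single instance dominates, and the
    scaled magnitudes are then averaged over instances.
    """
    n_features = len(local_weights[0])
    totals = [0.0] * n_features
    for row in local_weights:
        magnitudes = [abs(w) for w in row]
        norm = sum(magnitudes) or 1.0  # guard against an all-zero row
        for j, m in enumerate(magnitudes):
            totals[j] += m / norm
    return [t / len(local_weights) for t in totals]


def filter_features(rows, scores, keep_fraction=0.5):
    """Keep the top-scoring fraction of feature columns (hypothetical helper)."""
    k = max(1, round(keep_fraction * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    kept = sorted(ranked[:k])  # preserve the original column order
    return [[row[j] for j in kept] for row in rows], kept


# Toy local weights for three instances and four features (made-up values).
local_weights = [
    [0.8, -0.1, 0.1, 0.0],
    [4.0, 0.5, -0.5, 0.0],  # larger scale, same relative pattern as row 1
    [0.2, 0.0, -0.6, 0.2],
]
scores = global_importance(local_weights)

# Toy data matrix with the same four feature columns.
data = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11]]
reduced, kept = filter_features(data, scores, keep_fraction=0.5)
print(kept)
```

In this toy setup the second instance has weights five times larger than the first but contributes the same normalized pattern, which is the kind of scale effect that normalization is meant to remove before aggregating.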