PI: Zhu Li

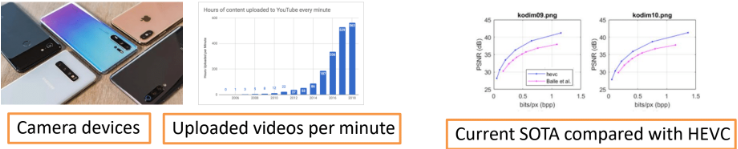

Video content accounts for more than 80% of internet traffic and continues to grow at an astonishing speed. Numerous applications, including video surveillance, virtual reality, and video gaming, to name a few, pose severe challenges to current video compression solutions. Conventional video coding methods optimize each component separately, which can lead to a sub-optimal overall solution. Motivated by the success of deep learning on computer vision tasks, we propose deep learning for video compression in an end-to-end manner. Unlike conventional methods, deep learning optimizes all parameters jointly, which is expected to provide superior performance over traditional techniques. Specific research topics include deep learning coding tools for intra prediction, inter/scalable prediction, and post-processing; learned adaptive filters for end-to-end image compression; and end-to-end video compression with motion field prediction.

Existing intra prediction uses only the boundary reference pixels to predict the current block, and these pixels might not contain sufficient predictive information. Motivated by intra block copy, which is well suited to recurrent patterns, we propose to combine intra block copy prediction with the reference pixels using a deep neural network to better characterize varied visual content.

In the post-processing stage, the residual block contains rich high-frequency information that can be leveraged to guide the loop filter to better enhance the reconstructed frame. A deep neural network can be devised to fuse the derived residual features with the latent representations of the reconstructed frame and generate the enhanced frame.

Current end-to-end video compression solutions fail to consider the temporal redundancy in the motion field. We propose end-to-end video compression with motion field prediction.

In video-based point cloud compression (V-PCC), a dynamic point cloud is projected onto geometry and attribute videos patch by patch for compression.
We propose a CNN-based occupancy map recovery method to improve the quality of the reconstructed occupancy map video. To the best of our knowledge, this is the first deep-learning-based work on accurate occupancy map recovery for improving V-PCC coding efficiency.
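
To illustrate why the reconstructed occupancy map can benefit from recovery, the following is a minimal sketch under the assumption that the occupancy map is coded at reduced resolution and upsampled at the decoder, as is common in V-PCC encoder configurations. The function names, the toy disc-shaped patch, and the precision value of 4 are hypothetical choices for illustration and are not taken from this project; the CNN itself is not shown.

```python
import numpy as np

def downsample_occupancy(occ, precision):
    """Mark a block as occupied if any pixel inside it is occupied."""
    h, w = occ.shape
    blocks = occ.reshape(h // precision, precision, w // precision, precision)
    return blocks.max(axis=(1, 3))

def upsample_occupancy(occ_lr, precision):
    """Nearest-neighbour upsampling back to full resolution."""
    return np.repeat(np.repeat(occ_lr, precision, axis=0), precision, axis=1)

# Toy full-resolution occupancy map: a filled disc inside a 16x16 patch.
yy, xx = np.mgrid[0:16, 0:16]
occ = ((yy - 7.5) ** 2 + (xx - 7.5) ** 2 <= 36).astype(np.uint8)

precision = 4  # assumed block size for the low-resolution map
occ_lr = downsample_occupancy(occ, precision)
occ_rec = upsample_occupancy(occ_lr, precision)

# Pixels marked occupied after reconstruction that were empty originally.
spurious = int(np.sum((occ_rec == 1) & (occ == 0)))
# Pixels that were occupied originally but lost after reconstruction.
missing = int(np.sum((occ_rec == 0) & (occ == 1)))
print(f"spurious pixels: {spurious}, missing pixels: {missing}")
```

In this sketch no occupied pixel is lost, but blocks along the patch boundary become fully occupied, which would produce spurious points in the reconstructed point cloud. A recovery network of the kind described above would take `occ_rec` (possibly with other decoded information) as input and aim to predict something closer to `occ`.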