PIs: Alina Zare, Paul Gader

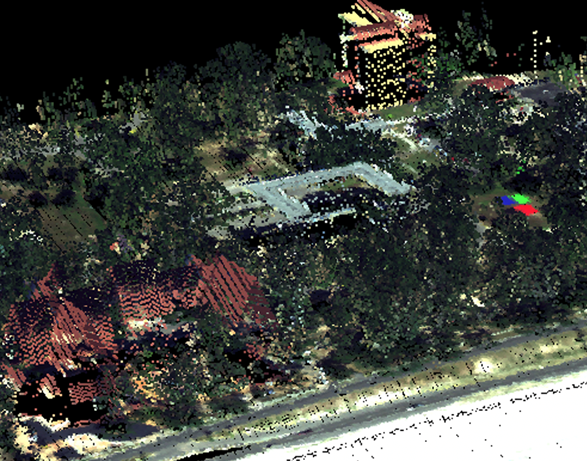

The goal of this work is to translate streams of data from individual sensors into a shared manifold space for joint understanding and processing. This work includes the investigation of manifold learning, data summarization, and intrinsic dimensionality estimation. In practice, processing chains for a given application are generally developed for a particular sensor or set of sensors. However, the data sets and sensor suites available vary across regions of the world and may have different spatial, spectral, and temporal resolutions. Because of differing environmental conditions and imaging equipment, even sensors of the same type can produce data with different distributions. Consider, for example, the task of determining building locations. This could be accomplished (with varying degrees of certainty) using LiDAR, hyperspectral imagery, or map data that includes building profiles. Rather than develop individual, sensor-suite-specific processing chains for determining building locations, we propose to develop a mechanism for mapping sensor data to a shared manifold space in which a single processing chain for the desired goal can be developed. In this way, an application implemented once can be applied to whatever sensor data are available. This work will leverage the team’s ongoing research in methods to address uncertainty and imprecision.
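The summary does not say how the shared manifold space is learned. As a minimal sketch only, assuming paired, co-registered samples from two sensors that observe the same scene, the example below uses linear canonical correlation analysis (CCA). CCA is a standard technique swapped in here purely for illustration and is not necessarily the team's method. It maps each sensor's features into a common low-dimensional space. The function names `fit_cca` and `project`, the regularization value, and the synthetic "sensor A" and "sensor B" data are all hypothetical.

```python
import numpy as np


def _inv_sqrt(c):
    """Inverse square root of a symmetric positive-definite matrix."""
    w, v = np.linalg.eigh(c)
    return v @ np.diag(1.0 / np.sqrt(w)) @ v.T


def fit_cca(x, y, k, reg=1e-6):
    """Fit linear CCA on paired samples x (n, dx) and y (n, dy).

    Returns per-sensor (mean, weights) pairs that map each sensor into a
    shared k-dimensional space, plus the top-k canonical correlations.
    `reg` is a small ridge term (hypothetical value) for numerical stability.
    """
    n = x.shape[0]
    mx, my = x.mean(axis=0), y.mean(axis=0)
    xc, yc = x - mx, y - my
    cxx = xc.T @ xc / (n - 1) + reg * np.eye(x.shape[1])
    cyy = yc.T @ yc / (n - 1) + reg * np.eye(y.shape[1])
    cxy = xc.T @ yc / (n - 1)
    # Whiten each sensor, then take the SVD of the whitened cross-covariance;
    # its singular values are the canonical correlations.
    kx, ky = _inv_sqrt(cxx), _inv_sqrt(cyy)
    u, s, vt = np.linalg.svd(kx @ cxy @ ky)
    wx = kx @ u[:, :k]
    wy = ky @ vt.T[:, :k]
    return (mx, wx), (my, wy), s[:k]


def project(data, params):
    """Map one sensor's samples into the shared space."""
    mean, w = params
    return (data - mean) @ w


# Synthetic example: two hypothetical sensors observing the same 2-D latent scene.
rng = np.random.default_rng(0)
n = 500
z = rng.normal(size=(n, 2))
x = z @ rng.normal(size=(2, 6)) + 0.1 * rng.normal(size=(n, 6))  # "sensor A", 6 features
y = z @ rng.normal(size=(2, 4)) + 0.1 * rng.normal(size=(n, 4))  # "sensor B", 4 features

params_x, params_y, corr = fit_cca(x, y, k=2)
zx, zy = project(x, params_x), project(y, params_y)
print("canonical correlations:", corr)
```

Once both sensors live in the same coordinates (`zx`, `zy`), a single downstream model, such as a building detector, could in principle be trained on one sensor's shared coordinates and applied to the other's. Linear CCA captures only linear relationships and requires paired samples. Nonlinear manifold-learning approaches would be needed when the sensors relate nonlinearly or when pairing is unavailable.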