PI: Joel Harley

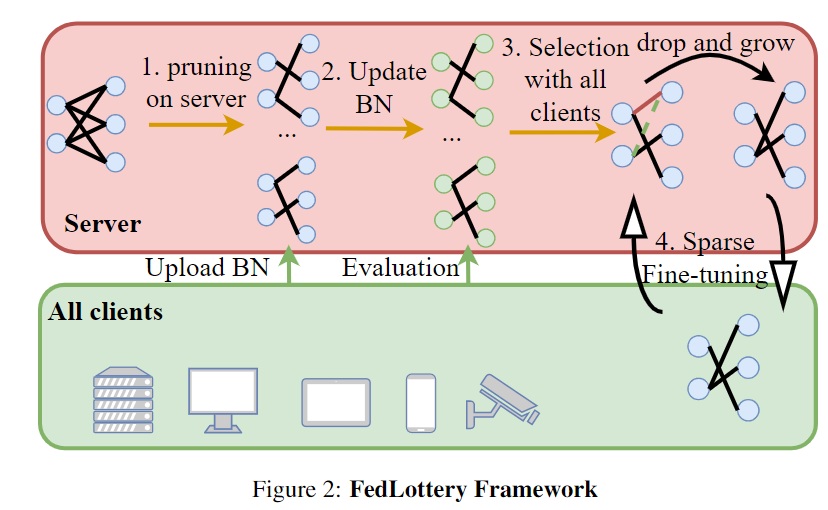

We first theoretically explore the impact of neural quantization on federated knowledge transfer across quantized DNNs and provide a convergence proof for quantized federated learning. We then propose Fed PPQ, an adaptive quantization strategy that specializes DNN precision for diverse IoT hardware platforms, trading off hardware efficiency against federated learning utility. Next, we design FedLottery, a framework for finding a common winner ticket for all clients while keeping memory usage and computational cost on devices low. FedLottery overcomes key challenges in federated pruning because clients maintain a low memory footprint during training, and the data on all clients helps the server find the correct winner ticket. One module of FedLottery is adaptive batch normalization selection (ABNS), which helps the framework find the best lottery ticket in the lottery pool. With this algorithm, the converged accuracy of each lottery ticket can be effectively evaluated with only a few iterations of inference on clients. The winner ticket may not exist in the lottery pool, i.e., the best lottery ticket may be close to, but not identical to, the winner ticket. To address this, we propose sparse fine-tuning (SFt). SFt performs sparse-to-sparse training on a lottery ticket by dropping and growing connections in certain layers. This algorithm converts a lottery ticket into a winner ticket with a few operations while maintaining low memory usage on devices. SFt is general enough to also be used in pruning-before-training methods to mitigate the effects of initialization and randomness.
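To make the drop-and-grow step of SFt more concrete, the following is a minimal sketch of one sparse-to-sparse update on a single flattened layer. The text above does not specify the selection criteria, so the ones used here are assumptions borrowed from common sparse-training practice: connections are dropped by smallest weight magnitude and grown by largest gradient magnitude. The function name `drop_and_grow` and its parameters are hypothetical and are not part of FedLottery.

```python
from typing import List, Tuple


def drop_and_grow(
    weights: List[float],
    mask: List[int],
    grads: List[float],
    k: int,
) -> Tuple[List[float], List[int]]:
    """One illustrative sparse-to-sparse update on a flattened layer.

    Assumed criteria (not taken from the source):
      - drop the k active connections with the smallest |weight|
      - grow the k inactive connections with the largest |gradient|
    The number of active connections, and hence memory use, is unchanged.
    """
    active = [i for i, m in enumerate(mask) if m == 1]
    inactive = [i for i, m in enumerate(mask) if m == 0]

    # Never swap more connections than are available on either side.
    k = min(k, len(active), len(inactive))

    # Candidates to drop: weakest active connections.
    drop = sorted(active, key=lambda i: abs(weights[i]))[:k]
    # Candidates to grow: inactive connections with the strongest gradient.
    grow = sorted(inactive, key=lambda i: abs(grads[i]), reverse=True)[:k]

    new_weights = list(weights)
    new_mask = list(mask)
    for i in drop:
        new_mask[i] = 0
        new_weights[i] = 0.0
    for i in grow:
        new_mask[i] = 1
        new_weights[i] = 0.0  # grown connections start at zero and are trained afterwards

    return new_weights, new_mask
```

Because each call swaps exactly as many connections as it removes, the sparsity level stays fixed, which is consistent with the stated goal of keeping memory usage low on devices. Which layers to update and how many connections to swap per step are left open here, as the description above does not fix them.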